In Just 3 Days, I Used GPT-4 to Generate the Top-Performing Golang Worker Pool on the Internet, Easily Defeating a 10k-Star Project on GitHub

1. I Wrote an Awesome Open Source Project

If you read Chinese, you can jump to the original article, 《仅三天,我用 GPT-4 生成了性能全网第一的 Golang Worker Pool,轻松打败 GitHub 万星项目》. This article was first written in Chinese and then translated into English.

With an excited heart and trembling hands, I used DevChat to harness GPT-4 and wrote the coolest, most artistic thousand lines of code of my life!

I wrote a powerful and easy-to-use Worker Pool program in Golang, named GoPool!

It appears to be feature-complete, performs well, is simple to use, and has elegant code and comprehensive documentation…

Stay modest, calm, and restrained; don’t give people a chance to criticize… I can’t hold back, I just can’t: it’s awesome, super awesome! The complete prompts are available at pro.devchat.ai.

1.1 Look at This Performance

- With one million tasks and a concurrency of 10,000, GoPool outperforms the 10k-star project ants and the 1k-star project pond on GitHub:

| Project | Time to Process 1M Tasks (s) | Memory Consumption (MB) |
|---|---|---|
| GoPool | 1.13 | 1.88 |
| ants (10k stars) | 1.43 | 9.49 |
| pond (1k stars) | 3.51 | 1.23 |

You might not believe it, but don’t just take my word for it: the complete testing process and test code are provided below, so you can run them on your own computer. If GoPool loses, you can slap me in the face. If GoPool wins, give it a star!

1.2 Look at These Features

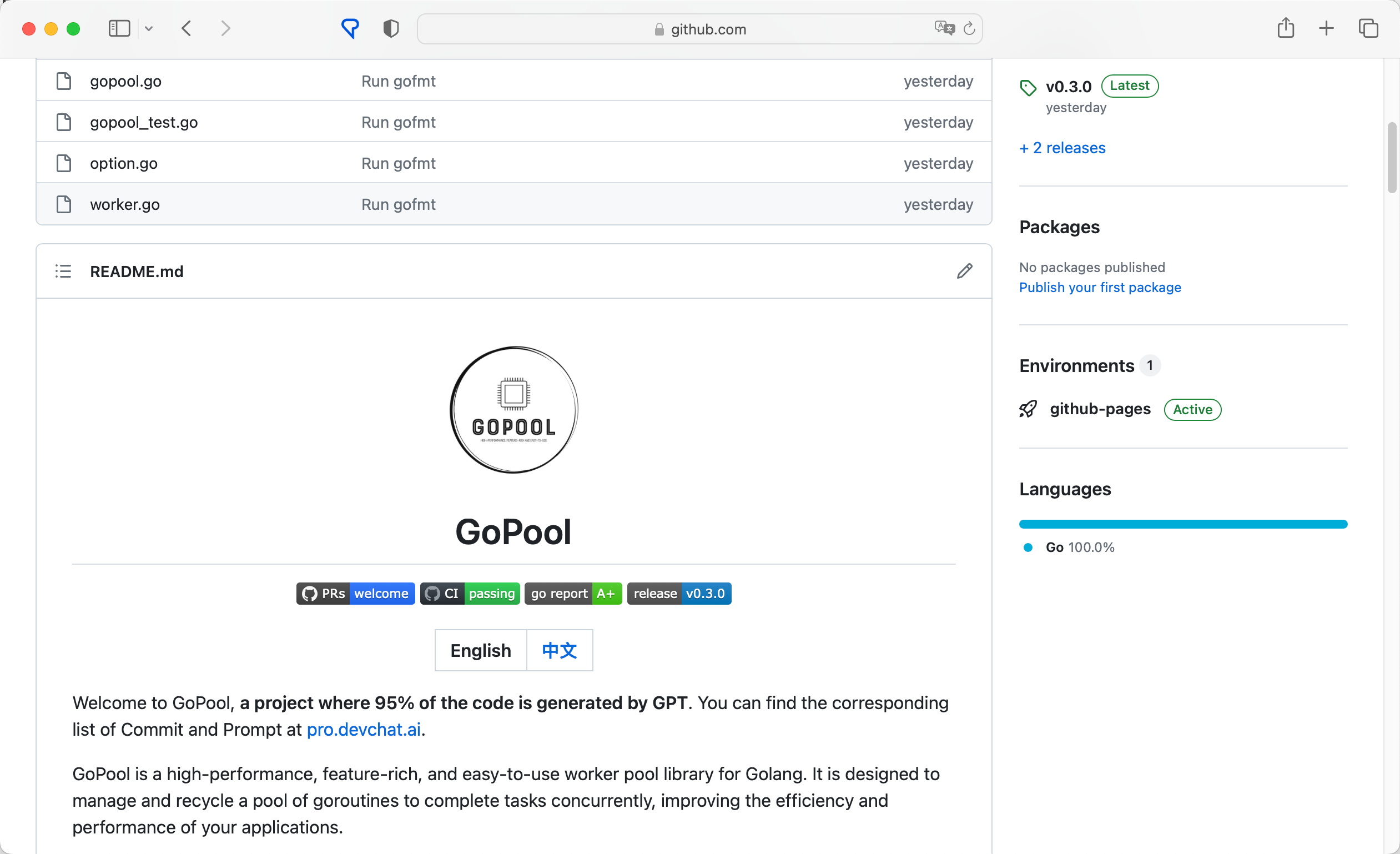

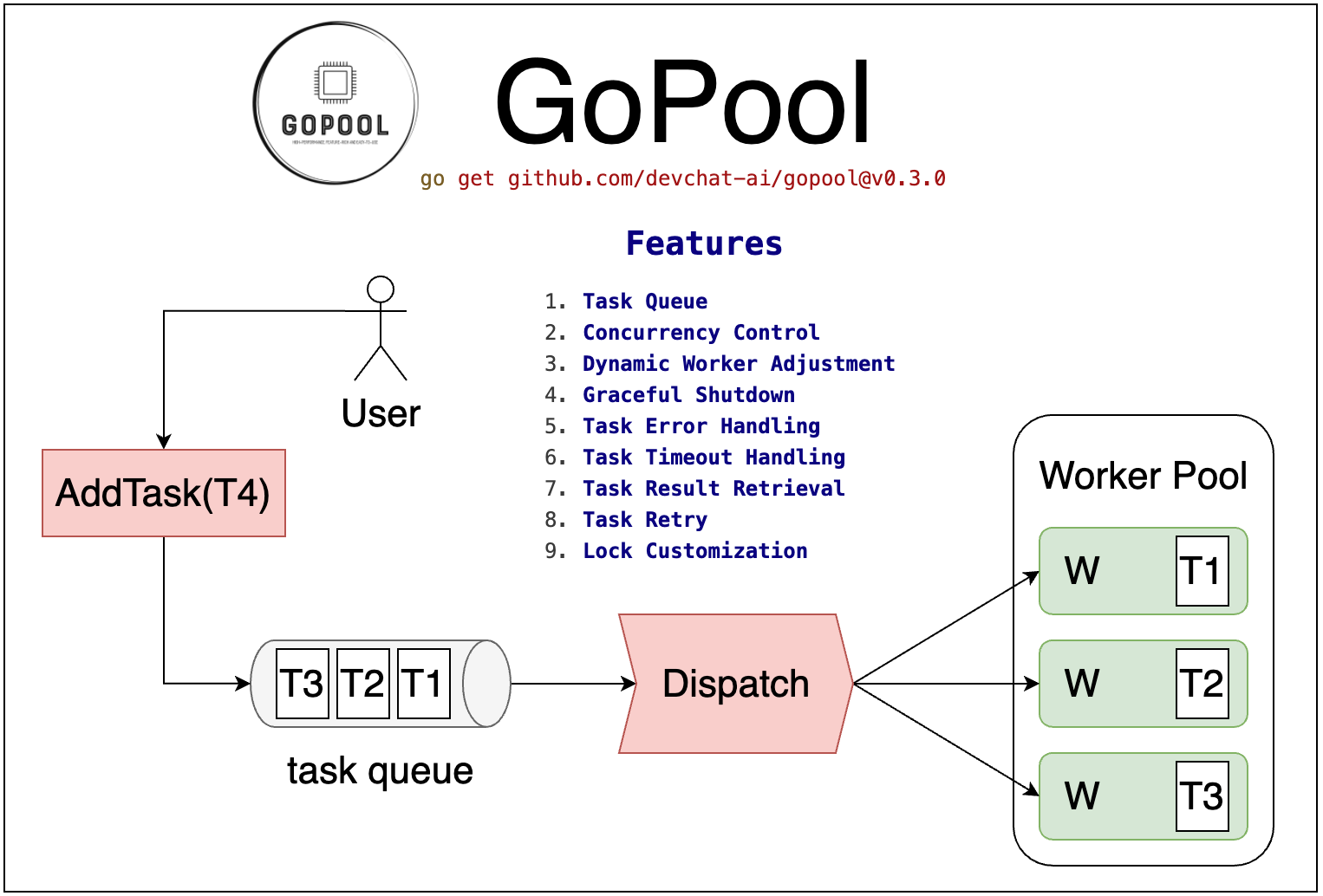

- Isn’t this homepage refreshing?

(This is a project that was completed in just three days. It has complete Chinese and English documentation, complete functional test code and performance test code, a logo, CI… Can you believe it?)

- Aren’t these features comprehensive?

Detailed feature sets and development process introductions are provided below.

1.3 Guess What I’ve Experienced in These 100 Days

Rewind to a week ago: by then, I had been “freeloading” on GPT-4 for over 100 days. During those three-plus months, I tried to get GPT-4 to complete all kinds of tasks, such as:

- Let GPT-4 write Terraform configurations;

- Let GPT-4 teach me how to deploy SaaS services on AWS;

- Let GPT-4 write Python web project code;

- Let GPT-4 write Golang CLI project code;

- Let GPT-4 write various operations and maintenance (O&M) scripts;

- Let GPT-4 complete unit tests;

- Let GPT-4 assist in refactoring code;

- Let GPT-4 provide suggestions and advice for my blog;

- Let GPT-4 teach me how to write concurrent programs;

- …

After using it every day for 100 days, I had a good sense of where the upper limit of GPT-4’s capabilities lies: what it is suitable for and what it isn’t, what it can do and what it can’t. At the end of those hundred days, I decided to use GPT-4 to generate this relatively complex Worker Pool project from scratch, to demonstrate “how much of a role GPT-4 can play in a real software project” (and, at the same time, to see whether I could use GPT-4 to generate the most awesome, easiest-to-use, and most powerful Golang Worker Pool library on the internet).

What? Right now you’re more interested in how to “freeload” on GPT-4? Alright, here’s the “Dragon Slaying Saber”, no thanks needed:

- “Dragon Slaying Saber” instruction manual: 《TODOOOOOOOOOOOO》

- “Dragon Slaying Saber” registration link: Click me

- By registering through the above link, you can use DevChat for free for N days (the exact number of days is uncertain; in any case, this is the public beta entrance, so grab the freebie while you can)

2. How Long Has It Been Since You Last Wrote a Concurrent Program?

As we all know, concurrent programming is hard, for several reasons:

- Non-determinism: The behavior of concurrent programs can become unpredictable due to slight changes in the scheduling order or timing of threads. This makes it very difficult to reproduce and diagnose problems.

- Race conditions: Errors in concurrent programs are often caused by race conditions, which only occur under specific thread interaction orders. These conditions can be difficult to reproduce and identify.

- Deadlocks and livelocks: Concurrent programs may encounter problems with deadlocks (two or more processes or threads indefinitely waiting for each other to release resources) or livelocks (processes or threads constantly changing state in response to each other’s behavior, but not doing any substantive work). These problems can be difficult to diagnose and solve.

- Performance issues: Concurrent programs may encounter various performance issues, such as thread contention, excessive context switching, etc. These problems may require complex tools and techniques to diagnose.

Writing a good concurrent program requires deep theoretical knowledge and practical experience. Concurrency is cool, but debugging it can make you want to pull your hair out. For many simple tasks, or applications that don’t need high performance, single-threaded programming is perfectly sufficient. Concurrency increases code complexity and the number of places where errors can occur, so if you don’t need it, don’t use it.

“Unless necessary, don’t do it concurrently”, but “most things in life don’t go as planned”, and the “necessary” scenarios always come unexpectedly. In Golang, the barrier to concurrency is very low: a single go keyword starts a new goroutine (which you can simply think of as Golang’s lightweight thread, or coroutine).
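For illustration, here is a minimal sketch (the function sumSquares is hypothetical, not from GoPool) of how little it takes to start goroutines, together with the sync.WaitGroup and sync.Mutex you still need in order to wait for them and to share state safely:

```go
package main

import (
	"fmt"
	"sync"
)

// sumSquares starts one goroutine per number with the go keyword and uses a
// sync.WaitGroup to wait for all of them before returning the total.
func sumSquares(nums []int) int {
	var (
		wg    sync.WaitGroup
		mu    sync.Mutex // protects total, which every goroutine writes
		total int
	)
	for _, n := range nums {
		wg.Add(1)
		go func(n int) { // one keyword is all it takes to start a goroutine
			defer wg.Done()
			mu.Lock()
			total += n * n
			mu.Unlock()
		}(n)
	}
	wg.Wait()
	return total
}

func main() {
	fmt.Println(sumSquares([]int{1, 2, 3, 4})) // 30
}
```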

However, in real enterprise projects, adding a go keyword is often not enough to handle concurrent tasks; we also need to consider:

- Control the level of concurrency, don’t let your massive tasks exhaust system resources;

- Reuse goroutines to reduce performance overhead (although the overhead of goroutines is indeed small);

- Gracefully handle task queues;

- Performance tuning (perhaps you can adjust the size of the goroutine pool based on system load);

- Better error handling (how to handle after a task fails);

- …

Obviously, this list isn’t exhaustive, and I’m sure you can think of other pain points. In short, to solve these problems, you have probably written a Worker Pool of your own at some point, or used a Worker Pool library written by someone else.

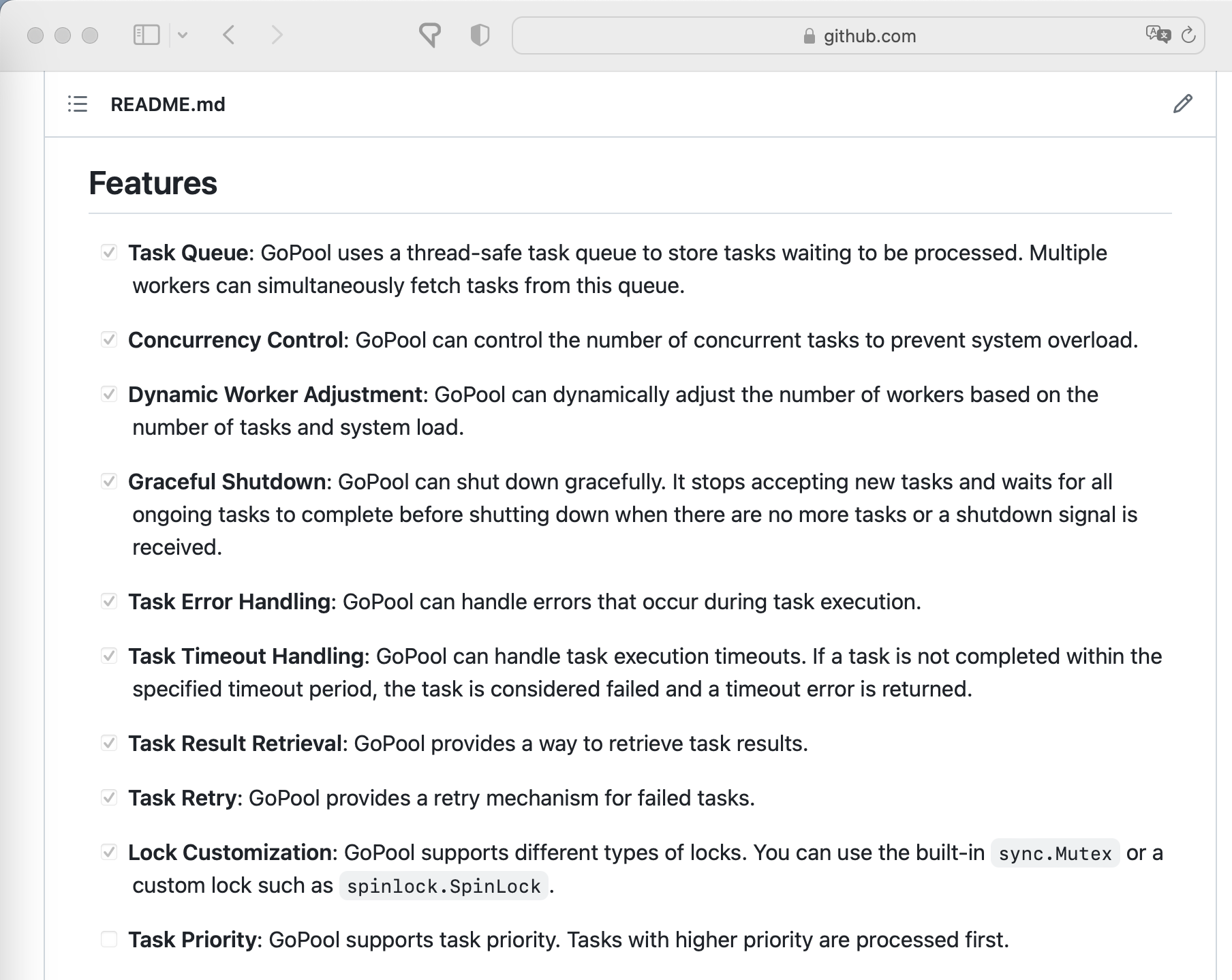

3. Question: What Features Should a Worker Pool Program Include?

Please think: In Golang programming, the Worker Pool concurrency pattern is often used. What features should a high-performance, feature-complete, and easy-to-use Worker Pool program include?

I don’t know whether you stopped to think about the answer before reading on. I didn’t: I’m lazy, and I have the Dragon Slaying Saber, so I chose to throw this question at GPT-4:

As shown in the figure, GPT-4 summarized a bunch of features:

A high-performance, feature-complete, and easy-to-use Worker Pool program in Golang programming should include the following features:

Task Queue: The Worker Pool needs a task queue to store tasks to be processed. This queue should be thread-safe and support multiple workers taking tasks from it simultaneously.

Dynamically Adjust the Number of Workers: The Worker Pool should be able to dynamically increase or decrease the number of workers based on the number of tasks and the load of the system.

Graceful Shutdown: When there are no more tasks to be processed, or when a shutdown signal is received, the Worker Pool should be able to shut down gracefully, i.e., stop accepting new tasks and wait for all tasks that have already started to complete before shutting down.

Error Handling: The Worker Pool should be able to handle errors that occur during task execution, for example, it can provide an error callback function.

Task Timeout Handling: The Worker Pool should be able to handle the situation where a task execution times out, for example, it can set a timeout period, and if the task is not completed within this time, it is considered a failed task.

Task Priority: The Worker Pool can support task priority, giving priority to tasks with higher priority.

Task Result Retrieval: The Worker Pool should provide a way to get the results of tasks, for example, it can provide a result callback function.

Task Retry: For failed tasks, the Worker Pool can provide a retry mechanism, for example, it can set the number of retries and the retry interval.

Task Progress Tracking: The Worker Pool can provide task progress tracking, for example, it can provide a progress callback function, or provide a method to query the current task progress.

Concurrency Control: The Worker Pool should be able to control the number of concurrent tasks to prevent system overload.

It may not be the most comprehensive list on the internet, but it’s definitely better than anything I would have come up with.

Then I asked GPT-4 to rephrase this paragraph in English and put it in a Markdown block, so I could easily insert it into the README.md file and start “incubating” a cool open source project:

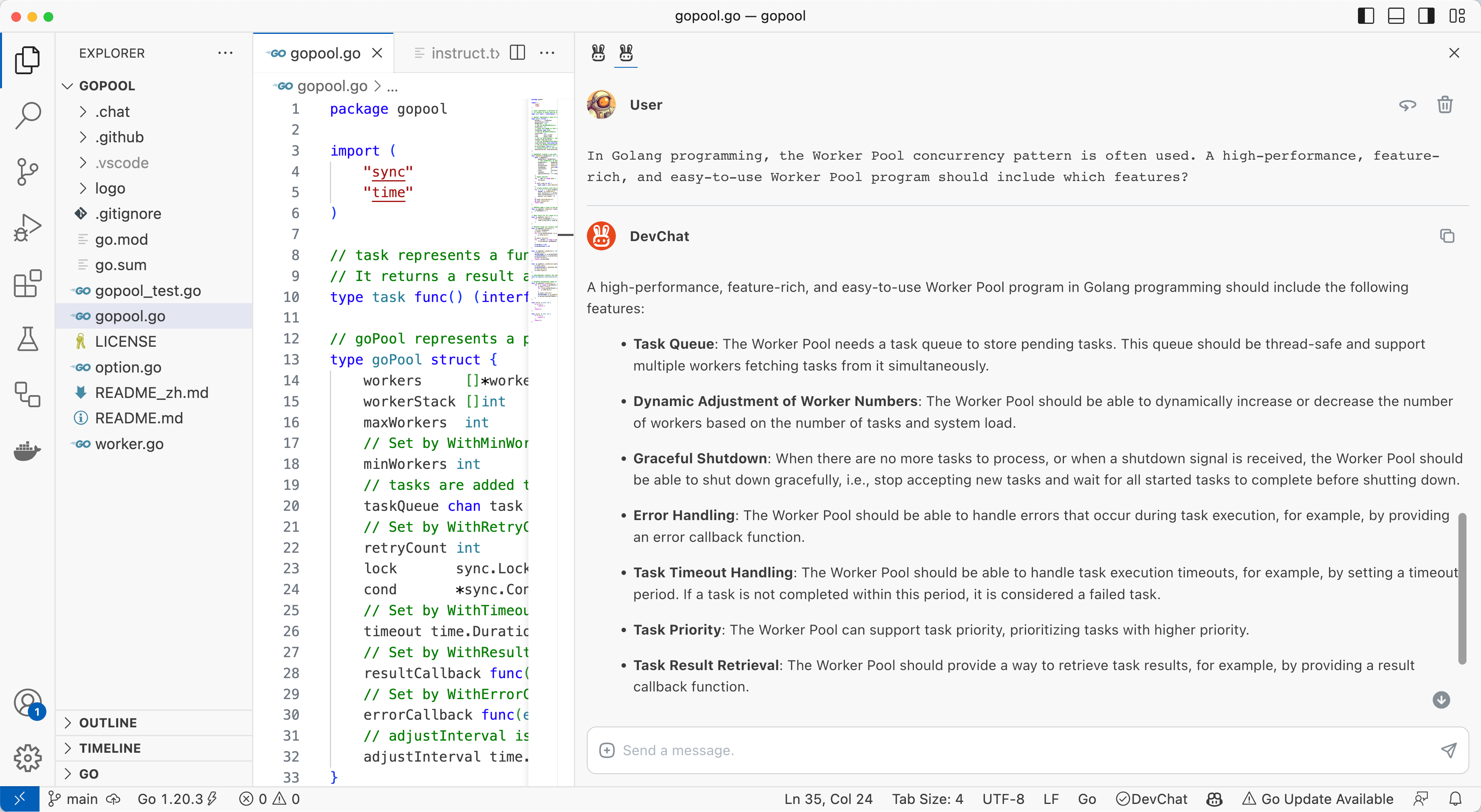

4. Let GPT-4 Write the First Line of Code!

GPT-4 listed a bunch of requirements earlier, so let’s have it implement them!

- Continue to command GPT-4 through DevChat

4.1 I Said to GPT-4

In the first version of the implementation, I think GoPool can include a task queue and concurrency control. I hope you can give the definitions of several main objects related to implementing these features, including the definitions of methods for structures and interfaces. I will give feedback based on your design, and you should start the specific coding implementation after getting my feedback.

4.2 GPT-4 Answered

Okay, based on your requirements, I suggest we can define the following main objects:

- Task: This is an interface that represents a task that can be executed. It has a method Execute for executing tasks.

- Worker: This is a structure that represents a worker goroutine. It has a method Start for starting to execute tasks.

- GoPool: This is a structure that represents the entire Worker Pool. It has a method AddTask for adding tasks to the task queue, a method Start for starting the Worker Pool, and a method Stop for stopping the Worker Pool.

This is just a basic design and may need to be adjusted according to actual requirements. For example, you may need to add some error handling logic, or add some configuration options, etc.

4.3 I Said to GPT-4 Again

Here is some feedback for you:

- The Task interface has an Execute() method, but the tasks users submit to GoPool may not implement this method, so I think Task can simply be a func() type.

- After AddTask(), GoPool should automatically start assigning Workers to handle Tasks, without requiring a separate call to Start(), so I think Start() is redundant.

- Release() seems a more appropriate name than Stop() for releasing the Pool.

- For ease of use, I think users should get a Pool by calling NewGoPool(), and then keep adding tasks by calling the Pool’s AddTask() method; these tasks should be processed as quickly as possible.

Please rewrite the code considering the above opinions, and reasonably divide it into two source files: gopool.go and worker.go.

4.4 GPT-4 Gave a New Answer

Based on your feedback, I made some modifications to the code. Here is the modified code:

In the gopool.go file:

In the worker.go file:

In this design, Task is a function type, and users can directly submit a function as a task. The Start method in the GoPool structure has been removed, and processing starts automatically after tasks are added. The Stop method has been renamed to Release, which is used to release the Pool. NewGoPool and NewWorker functions have been added, so users can create a new GoPool or Worker by calling them.

4.5 Submit the First Code Commit

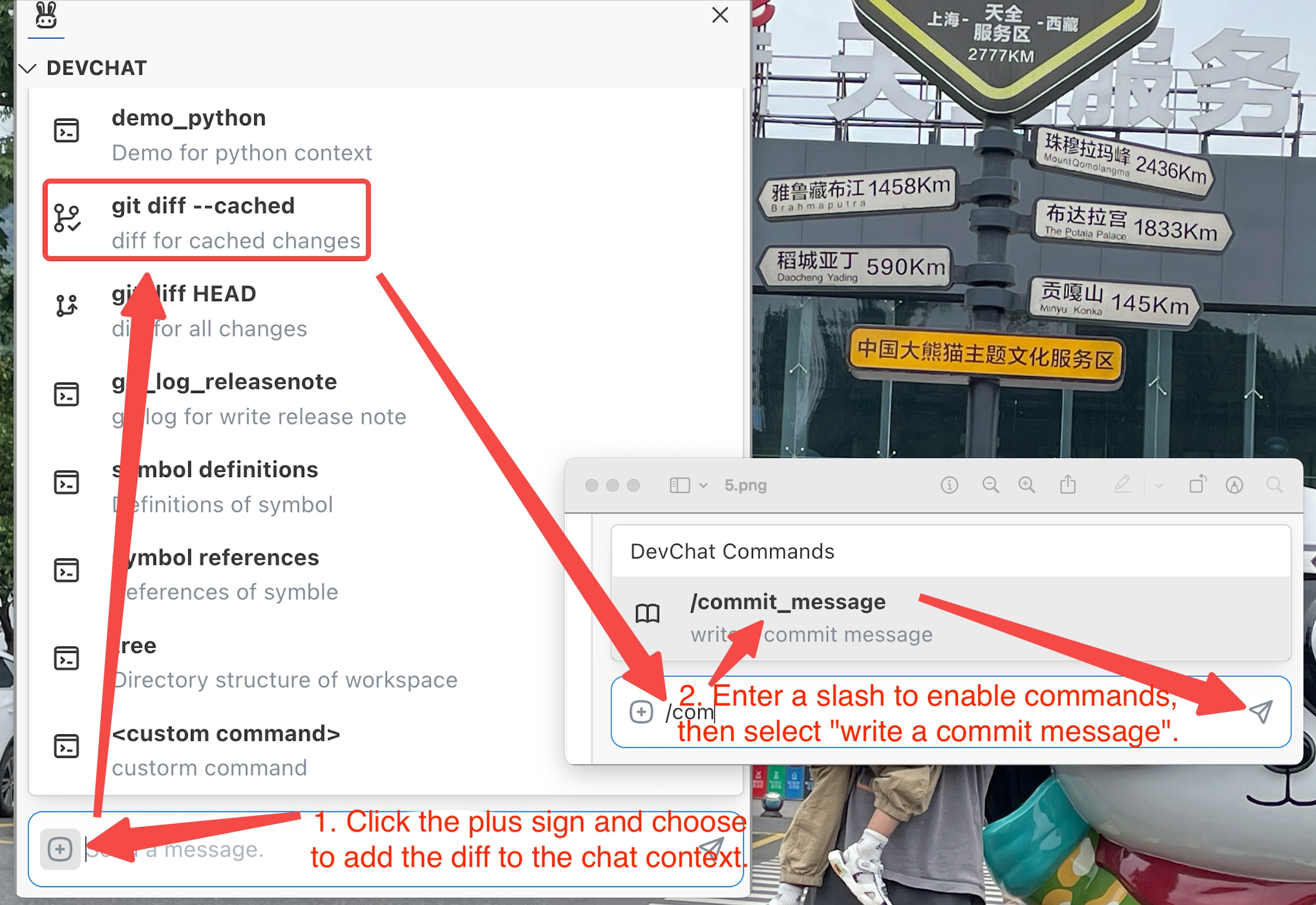

After two rounds of communication, I am quite satisfied with the first version of the code given by GPT-4, so I typed the git add --all command, and then let GPT-4 help me write the commit message:

Writing commit messages in DevChat is also very convenient: with a few simple steps, you can send the current code changes to GPT-4 and have it write a commit message in native English. The final answer given by GPT-4 is as follows:

Note:

- You can see the complete prompts for all commits of this project at pro.devchat.ai;

- The prompt for the current commit is linked here.

5. After GPT Wrote 1000 Lines of Code…

In this way, I kept giving GPT-4 requirements and letting it gradually implement the features, along with test cases, documentation, and so on. After 20+ commits, the GoPool project became stable.

5.1 Let’s Look at the Current GoPool Feature Set

Of the requirements GPT-4 listed at the beginning, the only one not implemented was “Task Priority”, because for now I don’t think it is a very commonly needed feature (of course, if you need it, feel free to raise it in the GoPool project’s issue list; I will use GPT-4 again and add it in minutes).

The current feature set is as follows:

- Task Queue: GoPool uses a thread-safe task queue to store tasks waiting to be processed. Multiple workers can simultaneously fetch tasks from this queue.

- Concurrency Control: GoPool can control the number of concurrent tasks to prevent system overload.

- Dynamic Worker Adjustment: GoPool can dynamically adjust the number of workers based on the number of tasks and system load.

- Graceful Shutdown: GoPool can shut down gracefully. It stops accepting new tasks and waits for all ongoing tasks to complete before shutting down when there are no more tasks or a shutdown signal is received.

- Task Error Handling: GoPool can handle errors that occur during task execution.

- Task Timeout Handling: GoPool can handle task execution timeouts. If a task is not completed within the specified timeout period, the task is considered failed and a timeout error is returned.

- Task Result Retrieval: GoPool provides a way to retrieve task results.

- Task Retry: GoPool provides a retry mechanism for failed tasks.

- Lock Customization: GoPool supports different types of locks. You can use the built-in sync.Mutex or a custom lock such as spinlock.SpinLock.

- Task Priority: GoPool supports task priority. Tasks with higher priority are processed first.

5.2 Is This GoPool Easy to Use?

- Download dependencies

- Use GoPool

I don’t think any comments are needed here: you can tell what these lines of code do just from the names of the variables and methods.

- The AddTask() method adds tasks; tasks are of the func() (interface{}, error) function type;

- Wait() waits for all tasks in the Pool to complete;

- Release() releases the Pool.

It’s that simple.

5.3 Dynamic Adjustment of Worker Numbers

Yes, the number of workers GoPool uses to process tasks can be adjusted dynamically. To enable this, you just need to pass a “minimum number of workers” to the NewGoPool() method:

With a maximum of 100 workers, passing gopool.WithMinWorkers(50) initializes a pool whose worker count varies between 50 and 100; without the gopool.WithMinWorkers(50) option, the pool has a fixed size (100 here) by default.

BTW: GoPool’s configuration here uses the “Functional Options Pattern”. For a detailed introduction to this pattern, please read my other blog post:

The algorithm for adjusting the number of workers is very simple:

- When the number of tasks accumulated in the global queue taskQueue exceeds 75% of the size of the workers pool, the size of the workers pool doubles (but does not exceed the specified maximum value).

- When the global queue taskQueue is empty, the size of the workers pool is halved (but not less than the specified minimum value).

Come on, show off the code:
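The resizing decision itself can be isolated in a pure function. This is a minimal sketch of the rule as described above, not GoPool’s adjustWorkers() code: it assumes “the size of the workers pool” means the current worker count, and it leaves out locking, how often the check runs, and the actual starting and stopping of workers:

```go
package main

import "fmt"

// nextWorkerCount applies the rule: double when queued tasks exceed 75% of
// the current worker count, halve when the queue is empty, and always stay
// within [minWorkers, maxWorkers].
func nextWorkerCount(current, queued, minWorkers, maxWorkers int) int {
	switch {
	case queued*4 > current*3: // queued > 75% of current, in integer math
		current *= 2
	case queued == 0:
		current /= 2
	}
	if current > maxWorkers {
		current = maxWorkers
	}
	if current < minWorkers {
		current = minWorkers
	}
	return current
}

func main() {
	fmt.Println(nextWorkerCount(50, 40, 50, 100)) // 100: backlog, so double
	fmt.Println(nextWorkerCount(100, 0, 50, 100)) // 50: idle, so halve
	fmt.Println(nextWorkerCount(80, 10, 50, 100)) // 80: no change
}
```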

With all its locks, loops, and conditionals, an adjustWorkers() method like this is, honestly, easy to get wrong when written by hand. And no, I didn’t write it; GPT-4 wrote all of it. I was only responsible for describing the requirements and reviewing the code it produced.

5.4 Task Timeout Handling

Sometimes we want to put a time limit on a task’s execution. After all, what if a buggy task gets stuck for three days and three nights? Adding a time limit to a task in GoPool is also very easy:

It’s so refreshing!

When initializing GoPool, pass gopool.WithTimeout(1*time.Second); GoPool then gives each task only 1s to run, and a task that takes longer is treated as failed with a timeout error.

The timeout logic isn’t hard, but it isn’t trivial either: it combines context, goroutines, select, and so on, which makes it tricky to get right by hand. Let’s look at the code GPT-4 produced:

The timeout logic is opt-in, which means that, by default, a task will not be “killed when it times out”. The code contains a check for this:

To keep the code readable and maintainable, I keep each function short: whenever a function gets so long that I have to scroll to see all of it, I promptly ask GPT-4 to refactor it.

5.5 Task Execution Error Handling

What if a task fails? At first, I didn’t know what to do, since everyone’s tasks are different. But GPT-4 suggested letting the caller customize error handling through a callback function. That sounded good, so GoPool supports this usage:

Here, the following option is passed to NewGoPool():

That is to say, GoPool lets you pass an error handling function func(err error) through gopool.WithErrorCallback(). In this custom function, you can simply print the error as in this example, or call pool.AddTask() again to re-run the task. In short, the error is in your hands, and you can handle it as you wish.

There is a piece of error handling logic in GoPool:

That is, after a task is executed, if the errorCallback function is not nil, GoPool calls pool.errorCallback(err).

5.6 Task Execution Result Retrieval

Sometimes a task needs to return a result, but how do you collect results when tasks run concurrently? GoPool lets you supply a callback function to process each result however you like:

The logic here is very similar to the “Task Execution Error Handling” above, so I won’t go into detail. The result is of type interface{}, so you can use whatever result format you like and decide in the callback how to handle it: simply print it, or send it to a channel for the next stage of your pipeline.
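Here is a minimal sketch of the result-callback idea, with the callback forwarding results into a channel for a later pipeline stage. runWithResultCallback is a hypothetical helper, not GoPool’s API. Because tasks run concurrently, the callback is called from several goroutines at once and must be safe for concurrent use (a channel send is):

```go
package main

import (
	"fmt"
	"sync"
)

// Task matches the task signature described in the article.
type Task func() (interface{}, error)

// runWithResultCallback runs tasks concurrently and passes every successful
// result to resultCallback.
func runWithResultCallback(tasks []Task, resultCallback func(interface{})) {
	var wg sync.WaitGroup
	for _, t := range tasks {
		wg.Add(1)
		go func(t Task) {
			defer wg.Done()
			if r, err := t(); err == nil && resultCallback != nil {
				resultCallback(r)
			}
		}(t)
	}
	wg.Wait()
}

func main() {
	results := make(chan interface{}, 3) // the next pipeline stage reads from here
	var tasks []Task
	for i := 1; i <= 3; i++ {
		i := i
		tasks = append(tasks, func() (interface{}, error) { return i * i, nil })
	}
	runWithResultCallback(tasks, func(r interface{}) { results <- r })
	close(results)

	sum := 0
	for r := range results {
		sum += r.(int) // results arrive in no particular order
	}
	fmt.Println(sum) // 14
}
```

The results channel is buffered to the number of tasks here; with an unbuffered channel, something must be reading from it while the tasks run, or the callbacks will block.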

5.7 Task Retry

Ideally, every task you submit succeeds, but tasks don’t always succeed on the first try, and retrying often solves the problem. In that case, you can set a retry count for GoPool:

After setting gopool.WithRetryCount(3), your task will have 3 retry opportunities.

You may remember the executeTask() function shown earlier; it contains a for loop that implements this retry logic:

5.8 Graceful Stop

In GoPool, Release works like this: once all the tasks added to the pool via the AddTask() method have been executed, the Pool can be released. The corresponding code is as follows:

Yes, I also pasted the Wait() method. The recommended usage of GoPool is:

So Release() should be called after Wait(). The shutdown logic waits until the taskQueue is empty and then closes the taskQueue, which means no new tasks can be added. Then, once workerStack == minWorkers, no workers are running and all of them are idle (back on the stack), so their memory is released.

6. Test the Performance of GoPool!

I know you care about GoPool’s performance, and maybe you don’t believe the numbers posted at the beginning. After all, fancier features usually mean worse performance: how can GoPool be the fastest on the whole internet while supporting so many features? Next, we’ll use test code written by GPT-4 to run a round of stress tests!

6.1 First Appreciate the Worker Pool in GoPool

Before posting performance data, I want to share a little surprise that GPT-4 gave me in this GoPool implementation.

When implementing the worker pool, I simply expected GPT-4 to build a slice-based stack of workers: when a worker is needed, an idle one is taken with the stack’s Pop() method, and after use, it is put back with Push(). I imagined GPT-4 would add a line like this to the goPool structure:

Instead, GPT-4 outsmarted me and produced these two lines:

This way, the “stack” I wanted becomes a “stack of indexes into the workers slice”: push and pop only move plain int indexes, and an index is then used to access the actual worker object in the workers slice. The workers themselves stay put in the slice and never have to be moved around; they can just do their job where they are.

Pop() and Push() then became like this (of course, it’s still written by GPT-4):

So later on, GPT-4 had this usage in the task distribution function dispatch():

Brilliant! Compared with the version I would have written by hand, the performance is clearly better.
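The index-based worker stack described above can be sketched like this. The worker type is a placeholder and all names are assumptions, not GoPool’s code. Push and pop only move ints, while the worker objects stay where they are in the workers slice. In a real pool, these operations would run under the pool’s lock, and pop would need to wait (or grow the pool) when the stack is empty, since popping an empty stack here panics:

```go
package main

import "fmt"

// worker is a placeholder; in a real pool it would own a goroutine and a channel.
type worker struct{ id int }

// pool keeps workers in a slice that never moves and tracks idle ones by index.
type pool struct {
	workers     []*worker
	workerStack []int // indexes of idle workers; the top is the last element
}

func newPool(n int) *pool {
	p := &pool{workers: make([]*worker, n), workerStack: make([]int, 0, n)}
	for i := range p.workers {
		p.workers[i] = &worker{id: i}
		p.workerStack = append(p.workerStack, i) // every worker starts idle
	}
	return p
}

// pop removes and returns the index of an idle worker.
func (p *pool) pop() int {
	last := len(p.workerStack) - 1
	idx := p.workerStack[last]
	p.workerStack = p.workerStack[:last]
	return idx
}

// push marks the worker at idx as idle again.
func (p *pool) push(idx int) { p.workerStack = append(p.workerStack, idx) }

func main() {
	p := newPool(3)
	// Dispatch: take an idle worker by index...
	idx := p.pop()
	fmt.Println(p.workers[idx].id) // 2
	// ...and hand its index back when the task is done.
	p.push(idx)
	fmt.Println(len(p.workerStack)) // 3
}
```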

6.2 Pick Two High-Star Golang Worker Pool Projects for a Head-to-Head Comparison

Searching for related keywords on GitHub, I found two similar projects:

1. pond

- Introduction in README: Minimalistic and High-performance goroutine worker pool written in Go.

- The current number of Stars is 932 (as of July 27, 2023)

2. ants

- Introduction in README: Library ants implements a goroutine pool with fixed capacity, managing and recycling a massive number of goroutines, allowing developers to limit the number of goroutines in your concurrent programs.

- The current number of Stars is 10.7k (as of July 27, 2023)

6.3 Write Stress Test Code to Test the Performance Difference of GoPool, Pond, and Ants under the Same Conditions

After skimming the README files of the pond and ants projects, I roughly knew how to use them. So I created a new demo project locally containing a pool_test.go file, and wrote this code:

The corresponding go.mod file is as follows:

Running it gives us the throughput difference among the three pools at the million-task scale.
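The original pool_test.go is not reproduced here. As a rough, standalone sketch of how such a measurement can be taken with only the standard library, the program below times a run and reports bytes allocated via runtime.MemStats. Assumptions: runTasks is a hypothetical plain channel-based pool standing in for the pool under test (you would swap in GoPool, ants, or pond), each task only increments a counter, and “memory” here means the TotalAlloc delta, which may differ from what the original benchmark measured. MB is computed as bytes / (1024 × 1024), the same conversion used in the results below.

```go
package main

import (
	"fmt"
	"runtime"
	"sync"
	"sync/atomic"
	"time"
)

// runTasks pushes taskCount tasks through a plain channel-based pool with
// poolSize goroutines. It stands in for the pool under test.
func runTasks(poolSize, taskCount int) int64 {
	var done int64
	var wg sync.WaitGroup
	queue := make(chan func(), poolSize)
	for i := 0; i < poolSize; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for task := range queue {
				task()
			}
		}()
	}
	for i := 0; i < taskCount; i++ {
		queue <- func() { atomic.AddInt64(&done, 1) }
	}
	close(queue)
	wg.Wait()
	return done
}

// measure reports wall time, bytes allocated (TotalAlloc delta), and the
// number of completed tasks for one run.
func measure(poolSize, taskCount int) (time.Duration, uint64, int64) {
	var before, after runtime.MemStats
	runtime.GC()
	runtime.ReadMemStats(&before)
	start := time.Now()
	done := runTasks(poolSize, taskCount)
	elapsed := time.Since(start)
	runtime.ReadMemStats(&after)
	return elapsed, after.TotalAlloc - before.TotalAlloc, done
}

func main() {
	elapsed, allocated, done := measure(10_000, 1_000_000)
	fmt.Printf("tasks=%d time=%v allocated=%.2fMB\n",
		done, elapsed, float64(allocated)/1024/1024)
}
```

For a fair comparison, each pool should run the same task body, with the same pool size, on the same machine, ideally over several runs.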

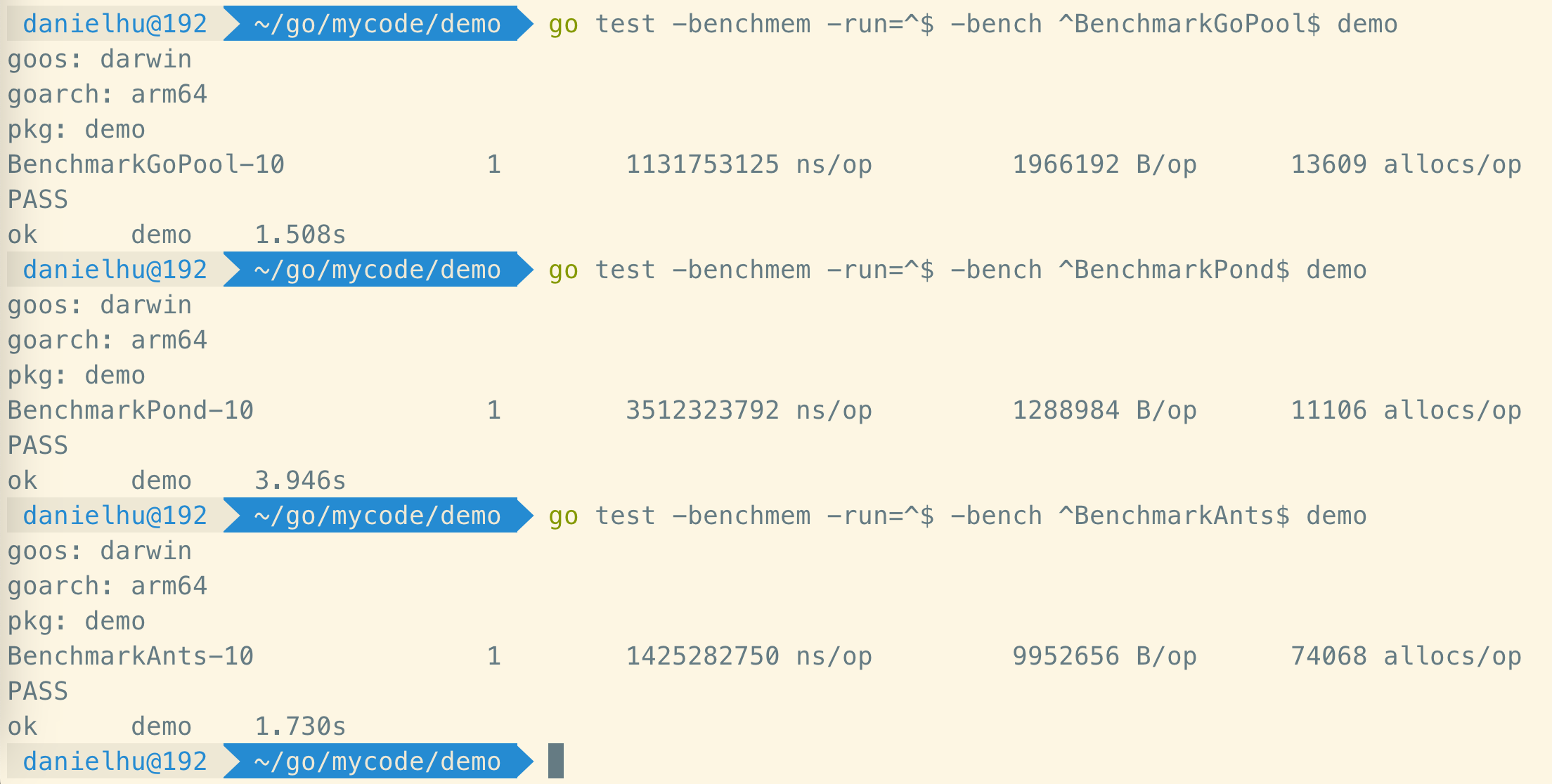

6.4 Stress Test Results: 10k Concurrency, 1M Tasks

As can be seen, in the case of a 10k pool capacity, the time to process 1000k (one million) tasks is:

- GoPool - 1.13s

- ants - 1.43s

- pond - 3.51s

The memory consumption is:

- pond: 1288984B = 1.23MB

- GoPool: 1966192B = 1.88MB

- ants: 9952656B = 9.49MB

The data is better than I imagined, and GoPool is the clear winner in overall performance!

- This gives us the table from the beginning of the article: with one million tasks and a concurrency of 10,000, GoPool outperforms the 10k-star GitHub project ants and the 1k-star project pond:

| Project | Time to Process 1M Tasks (s) | Memory Consumption (MB) |
|---|---|---|
| GoPool | 1.13 | 1.88 |
| ants (10k stars) | 1.43 | 9.49 |
| pond (1k stars) | 3.51 | 1.23 |

7. Harness AI, Be the “New Programmer”!

I couldn’t hold back, and this article is heading towards ten thousand words.

I originally wanted to detail every round of prompts and GPT-4’s answers in this article, showing along the way which prompts get better answers from GPT-4, which specific problems GPT-4 is good at solving, and which tasks it handles poorly. But obviously, even 20,000 words wouldn’t be enough, so instead I’ve shown you the big picture and the results, namely how GoPool, GPT-4’s work, performs in terms of functionality and performance, to give you an intuitive impression of “what GPT-4 can do”. In future articles, I will describe in detail how I got GPT-4 to handle code refactoring, test case writing, documentation, bug location and fixing, and other tasks during GoPool’s development.

Related articles will continue to be published on DevChat’s WeChat official account “Simayi Intelligent Programming” and my personal official account “Talking about Cloud Native”; follow us to stay updated!

Overall, GPT-4 has indeed opened Pandora’s box, and it is foreseeable that in the near future a large proportion of the code in all kinds of software projects will be generated by GPT-4. I wonder whether you, too, went through a period of ChatGPT anxiety, worrying about being replaced by AI.

After a few months of “dancing with GPT-4”, I’ve found that it is still too early for AI to replace programmers; at least GPT-4 can’t do it (I’m not sure about GPT-5 or GPT-6). When you can describe your needs clearly and they don’t involve very complex logic, GPT-4 often produces beautiful code. But GPT-4 is not always correct: 100 lines of code from it may contain a bug. If you can’t understand the code GPT-4 generates, then even if only one of those 100 lines is wrong, the code won’t run, and all 100 lines are worthless to you. Conversely, if you can find that one wrong line, GPT-4 has effectively written the other 99 lines for you, which is a huge efficiency gain!

In addition, because of the limited context size, you can’t send very long code to GPT-4, nor can you have it write very long code for you in one go. So once a project gets even a little complex, a human still has to sit in the driver’s seat, and GPT-4 can only sit in the co-pilot’s seat: your navigator, chatting with you and answering your questions. If you understand what she tells you, she is valuable; if you don’t, she is just noise and dead weight that only slows your driving down.

In short, the best approach right now is to harness AI, using its power to improve your work efficiency, and to become a “new programmer” who “can write code with GPT”!

By the way, the prompts used to generate the GoPool project and the answers given by GPT-4 in each round have been uploaded to pro.devchat.ai.

Finally, remember: to grab DevChat’s freebie, click here to register for free.